ROAR: Robot Open Autonomous Racing

This website was written by Alfredo, Alvin, Aman, Sihao, and Wesley, and describes what they did for their EECS 106A/206A final project at UC Berkeley during Fall 2020.

Table of contents

Introduction

For our final project, we participated in Berkeley’s ROAR competition, in which teams race 1/10th scale self-driving cars around a track and compete for various prizes. Due to the pandemic, this year’s competition was done through a Carla simulation, and each team was provided with the same starter code to build off of. After submitting our project to this competition, we were fortunate enough to win the Grand Prize in this year’s 2020 ROAR S1 series and have published our code in all of its messy glory on GitHub.

However, the end goal of our project was not only to win a race, but also to create a simplified model of an autonomous mobility-as-a-service system (think Uber or Lyft with self-driving vehicles, or your Tesla driving people around while you work an office job). As such, in addition to driving at high speeds, we were interested in following lanes and traffic signals, detecting and avoiding obstacles in our path, and providing a smooth and stable ride, which made our project more interesting than just a racing project.

Design

We worked in a world where the car knew its current speed, position, and orientation in a world frame (either via speedometer, GPS, and compass or through Carla magic) and was controlled by a steering wheel and a gas pedal (known in Carla as steering and throttle, respectively). There was also a brake pedal, but that was more for decoration than anything. The car was provided a list of waypoints (with coordinates in the world frame) that traveled along the desired route. In our project, these waypoints were given in a text file, but can also be generated by something like Google Maps in the real world. There were lane lines on the road, barriers along the sides of the road, and an occasional stationary car or barrier in the middle of the road to keep things interesting.

Given this setup, our goals were to 1) detect lanes and obstacles, 2) plan a path that roughly follows the given waypoints while staying in our lane and avoiding obstacles, and 3) control the car so that it drives smoothly and accurately along our planned path. For the race, we didn’t worry about lanes and obstacles and instead modified our waypoints and controlled the car so that it went as fast as possible with minimal crashing.

With these goals in mind, we split our tasks into four parts – sensing, planning, controlling, and racing – and made the following design choices:

Sensing and Planning

The starter code directly used the pre-recorded waypoints as its “path planner” and was able to run a loop without the assistance of perception. However, there were some zig-zags in the path due to the poor quality of the waypoints. In order to overcome this, we optimized the path by having the car follow the lane center. We used a front RGB camera to detect white and yellow lane segments in front of the car and a front depth camera to calculate their 3-D coordinates. Instead of just following lanes, which sometimes cannot be detected, we used lanes as an augmentation to “correct” improper waypoints. That is, the input of the controller was decided by a combination of lane center and pre-recorded waypoints. This design allowed us to generate a smooth path when lanes were visible and follow the waypoints when lanes were not visible.

We also decided to use the front RGB camera’s image feed to detect obstacles in the car’s way, such as an NPC car on the track, or the walls on the sides on the track. We could then use these signals to plan the car’s immediate route so as to avoid these obstacles. For instance, if the camera showed a group of cars in front, the car could automatically find a path that takes it around or between the other cars and follow it.

Controlling

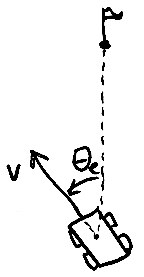

The controller receives the coordinates of the next waypoint and the desired speed from the path planner. Using this information, the controller can find the difference in speed between our current speed and target speed and the difference in angle between our current trajectory and the next waypoint (see figure below), and select steering and throttle values to correct any deviation.

The starter code came with two separate PID controllers. However, we found this difficult to tune due to the mental mismatch between the quantitative Kp, Ki, Kd parameters and the qualitative desired behavior (e.g. “more sensitive steering”). Additionally, there was no coordination between steering and throttle, which resulted in very aggressive turns, and the PID controllers did not account for the drag of the car, which resulted in the car going about 10% slower than the target speed.

To correct these issues, we made two notable changes:

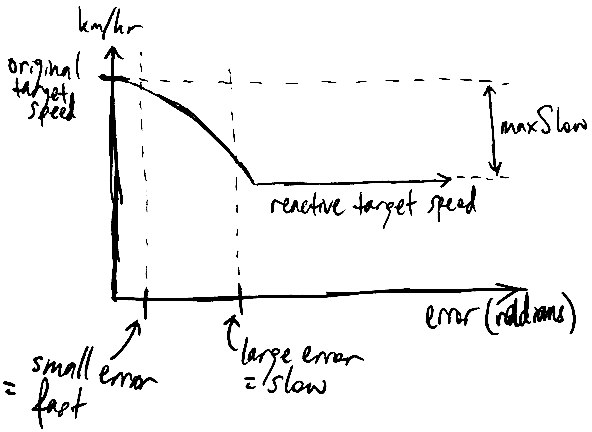

- We added reactive speed control, which reduced the target speed when there was a large error in direction. This allowed us to slow down as we turned and resulted in smoother turns and better recovery when off-track with a small trade-off in speed.

- We designed and implemented an LQR controller to use instead of the PID controllers. This enabled the controller to account for the car’s dynamics (e.g. drag) when selecting steering and throttle values, which resulted in more accurate adherence to the planned path. The parameters used in the LQR were also more intuitive to tune than the parameters in the PID. For instance, if the car was veering back and forth from over-steering, we could increase the cost of steering in the LQR controller, and the controller would steer less aggressively. This ease of tuning allowed us to get significantly better performance and reach higher speeds when compared to the PID controllers. However, although the LQR had better performance than the PID, it was also significantly more complex. We had to spend a lot of time determining the system dynamics of the car and finagling the LQR math to converge to a non-zero desired trajectory. The grimy details of the LQR controller can be found in its implementation section.

Racing

For the racing aspect, we optimized our route and speed based on the given waypoints. We optimized our controllers for high speeds and implemented a waypoint smoothing and look-ahead algorithm to take turns more smoothly, cut in on the corners, and adjust the speed accordingly.

Implementation

Sensing

Lane Detection

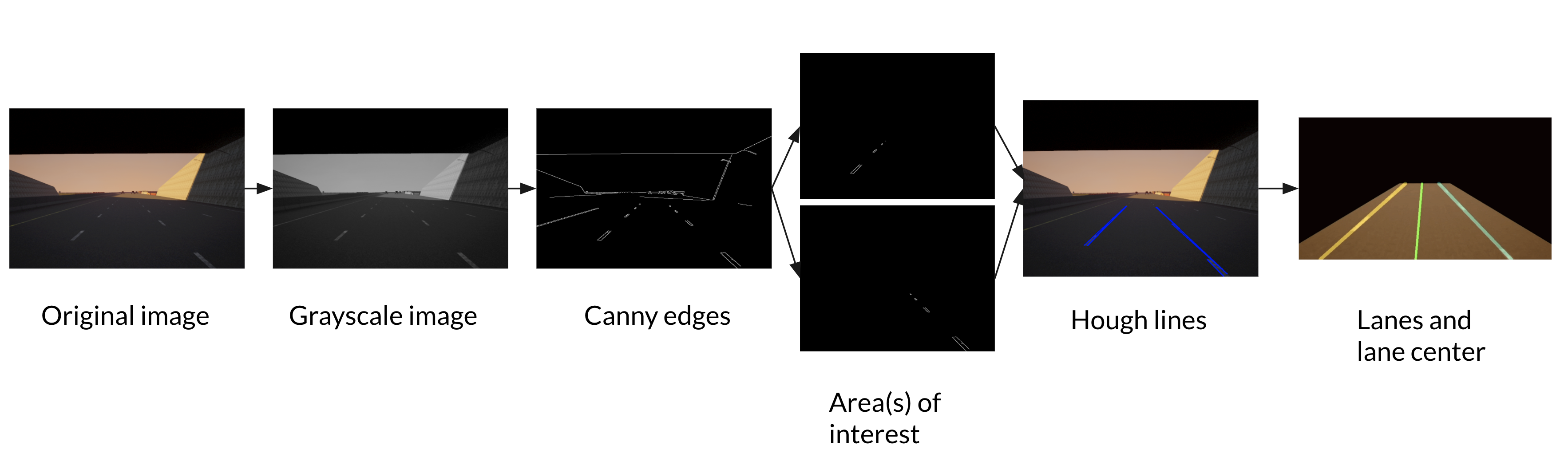

The lane detector takes in the images captured by the front RGB and depth cameras as input and calculates the 3-D world coordinates of the left lane, right lane, and lane center. At each time step, the lane detection algorithm does the following:

- convert the original RGB image to a grayscale image,

- calculate the canny edges of the image,

- cut out two triangle-shaped areas of interest, one on the left bottom part of the image, another on the right bottom part,

- calculate Hough lines from each image and take their average to form two straight lines as our detected lanes,

- calculate world coordinates of lanes using depth camera image, and

- use the average of world coordinates of the left and right lanes as the lane center for path planning.

Obstacle Detection

The obstacle detection algorithm uses the front RGB camera’s image feed as its input and identifies any obstacles in the camera’s field of view at each time step. Examples of obstacles include other cars, the walls on both sides of the track, and barricades in the middle of the road.

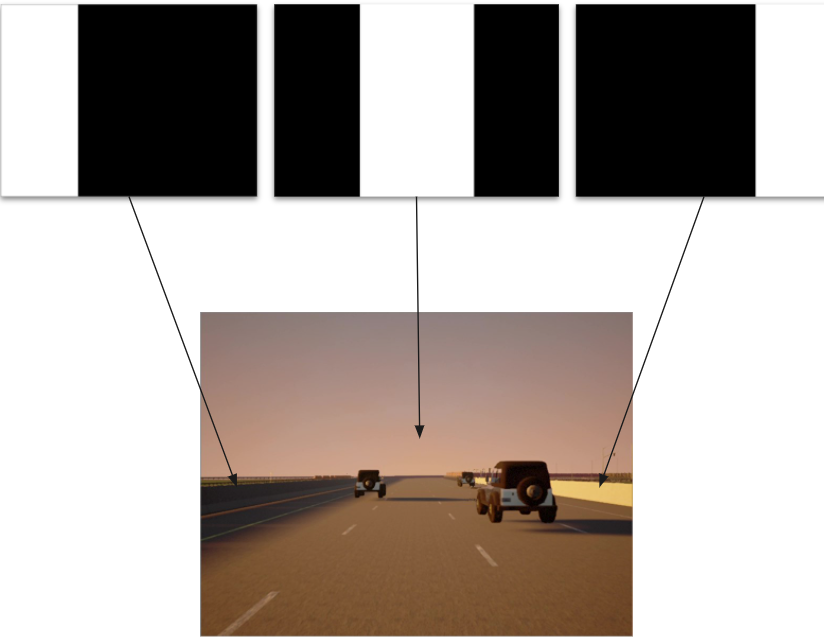

We initially tried to use the entire input image from the RGB camera as is. However, we quickly ran into problems with this approach because we could not figure out how to separate the processes to detect walls and other objects on the track, which resulted in our algorithm detecting walls and cars as one ‘obstacle’.

After giving it some thought, we realized something obvious - the walls are always on the sides of track. To take advantage of this fact, we used three masks on every input image before processing it further - left and right masks (to focus on detecting walls) and a middle mask (for obstacles directly on the track). This increased the accuracy of detection significantly.

Once the masks were applied, we used classical image segmentation and processing techniques like converting the image to grayscale, applying Gaussian blurring and thresholding, and drawing contours around objects of interest. We had to tune many of the parameters in the thresholding and contour functions, which was a series of fruitful experiments that improved our detection algorithm as well.

In summary, our algorithm has the following key steps:

Masking

- Divide the image into three areas of interest - left, right, and center.

- Apply bitwise masks on each of the areas of interest to segment the relevant parts of the image:

- Left and Right masks to detect walls.

- Center mask to detect objects on the track.

- Process both images and merge them together to detect obstacles.

Processing

- Convert the original RGB image to a grayscale image. Thanks Lab 6.

- Blur the image using a Gaussian kernel so that the image is smoothened, and to counteract the effect of the car’s moving camera.

- Apply adaptive thresholding on the blurred image to distinguish between objects of interest and the background. Thanks Lab 6.

- Find and draw rectangular contours to identify objects of interest.

| Camera Feed | Thresholding Applied |

|---|---|

|

|

Planning

Lane Following

The ROAR/planning_module/local_planner/lane_following_local_planner.py script contains the main logic of our lane following planner. We defined a variable, confidence, which is a scalar between 0 and 1. It decays exponentially when a lane is not detected, and is reset to 1 when both are detected. We calculated the target location based on a weighted sum of the mid-point of the lane center and the next waypoint. We also limited the target speed by confidence. The location of the next waypoint and target speed were then fed into our controller. The pseudocode of the lane following algorithm is as follows:

def follow_lane(waypoints, α: confidence decay rate 0 ~ 1, β: speed limit factor > 0):

confidence = 1

for each step:

left_lane, right_lane, lane_center = detect_lanes_from_camera_input()

if left_lane or right_lane not detected:

confidence = confidence * α

else:

confidence = 1

target_location = mid_point(lane_center) * confidence +

get_next_waypoint(waypoints) * (1 - confidence)

target_speed = maximum_speed * exp((1 - confidence) * -β)

controller.run(target_location, target_speed)

Controlling

We made two additions to the controller: reactive speed control and LQR maths.

Reactive speed control

The reactive speed control is a straightforward concept that boils down to one line of code in ROAR/control_module/lqr_controller.py:

target_speed *= max((math.cos(self.errBoi) - 1) * self.slowdown, -self.maxSlow) + 1

Here, self.errBoi describes the error in the direction the car is going, self.slowdown is a multiplier to increase or decrease how much we slow down given a particular error in direction, and self.maxSlow is the maximum amount that we want to slow (just so we don’t come to a complete stop when we are very off-track). These three variables are all defined in the ROAR_Sim/configurations/lqr_config.json configuration file. There are a couple of moving parts, so hopefully, the graphical representation below is helpful.

LQR maths

The LQR controller, on the other hand, is a bit more complicated. The lqr_controller.py script contains the guts of our LQR controller, which uses the parameters stored in the lqr_config.json file. Those who are familiar with LQR controllers can check out those files directly. For those unfamiliar with LQR controllers, we have written up an explanation of how an LQR controller works and how we built ours.

LQR controller basics

An LQR controller consists of two main components:

- system dynamics, and

- costs

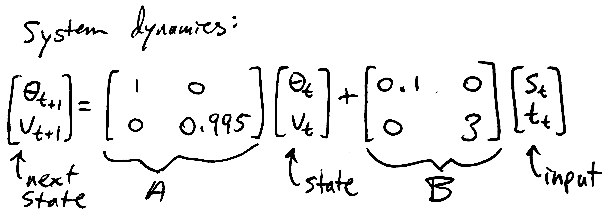

The system dynamics is a linear model of how the system (i.e. a self-driving car) changes state (i.e. speed and direction of motion) based on its current state and external inputs (i.e. steering and throttle). This is the “linear” part of the linear quadratic regulator. The discrete-time linear model used in our LQR controller is shown below, where each time step was 0.03 seconds (as determined by Carla magic). We will explain later how we got those exact numbers in the matrices, but for now the important thing is we have an A and B matrix that describe the intrinsic physics of the car.

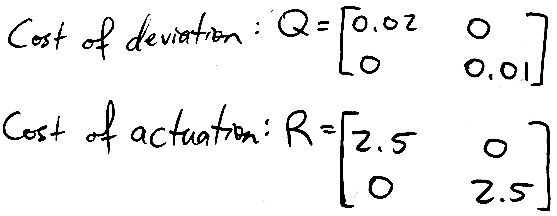

Next up are the costs. The costs are the knobs we can turn to change the qualitative behavior of our controller. There are two types of costs: cost of deviation Q and cost of actuation R. The cost matrices used in our first functional LQR controller are shown below. The cost of deviation is applied to the state x of the car (angle and speed) and the cost of actuation is applied to the inputs u to the car (steering and throttle). By inspecting these matrices, we can see that we care a bit more about going in the right direction than going at the right speed (since the 0.02 cost of angle is more than the 0.01 cost of speed), and we are very gentle with the steering and throttle (given the relatively high cost of 2.5 for both steering and throttle).

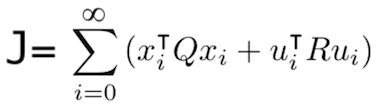

To be more mathy, these costs are used to form a cost function J, shown below. We see in J that the costs are multiplied by the states and inputs squared and summed up over an infinite time horizon. This is the “quadratic” part of the linear quadratic regulator. Our goal now is to select a sequence of inputs u (i.e. steering and throttle values) that minimizes this cost.

To calculate the inputs that minimize our cost function, we solve this thing called a discrete-time algebraic Riccati equation using our A, B, Q, and R matrices to get some P matrix, and use that P matrix along with our system dynamics A and B to generate a linear feedback matrix K such that u = -Kx is the optimal input at each time step. This math is kind of gross and does not need to be understood in order to use the LQR, so we will not go into detail here. The code for this optimization is also very succinct (literally two lines because it utilizes the SciPy library), but if you’d like to read those two lines and consider the depth of their meaning, check out the _dlqr method in lqr_controller.py. The upshot is that by minimizing J with LQR math, we get steering and throttle values that can control the car very effectively.

“Wait a second,” you say, “I’m a bit confused about something. If the cost of deviation is multiplied by the square of the state, doesn’t that mean by minimizing the costs, we drive the state to zero? That means that the LQR controller just makes the car face a certain direction and while going 0 km/hr! Doesn’t seem very race-worthy to me.” And you are completely correct! An LQR naturally pushes everything (including the speed of the car) to zero, which is the “regulator” part of the linear quadratic regulator. In order to follow a desired trajectory and use the LQR as a controller, we need to change where “zero” is. For the direction the car goes in, this is reasonably straightforward – zero is just the direction to the next waypoint. Speed is a bit more tricky, because the drag of the car is proportional to the speed, so the amount of throttle needed to maintain a particular speed changes depending on said speed. For our LQR controller, we calculate the desired throttle needed to maintain the desired speed, find the difference between our current speed and the desired speed, and run the LQR on that difference in speed to drive it to zero. We then add the throttle value the LQR spits back out to the desired throttle we found earlier and use that sum as our next input. In equilibrium, this results in the car going in the desired direction at the desired speed. This is all implemented in lqr_controller.py, so take a look at that if you are interested in the actual code.

That’s it! Now you kind of know how an LQR controller works! “But wait,” you say, “you’re absolutely fantastic at explaining things and everything makes perfect sense, but where did the numbers in those A, B, Q, and R matrices come from? How would I come up with them myself?” Glad you asked!

A, B, Q, and R matrices

Let’s start with the system dynamics A and B since they were by far the most difficult to determine. Before we can even think about the matrices themselves, we have to decide what state variables to include in our linear model (e.g. position, velocity, acceleration, jerk, direction, angular velocity, angular acceleration, roll, pitch, yaw, derivatives of roll, pitch, yaw, etc, etc, etc) based on what linear relations (if any) exists between them and each other and what linear effects the inputs have on them. To explore this, we recorded the position, velocity, and orientation of the car, the position of the next waypoint, and the inputs to the car as it drove around the track using the tuned PID controllers. These data files (along with the Jupyter Notebooks used to explore the data) can be found in the carla_driving_data directory of our GitHub repository.

Once we collected this data, we tossed it into a Jupyter Notebook and graphed the candidate state variables pairwise against each other and against the inputs to identify any linear trends. We initially selected direction, angular velocity, speed, and acceleration as our state variables and ran some linear regressions to get rough estimates of what the numbers should be in the A and B matrices. We then constructed our A and B matrices from those regressions, arbitrarily instantiated some Q and R matrices with positive values along the diagonal, and finagled the LQR math so that we could follow a desired trajectory based on simulated system behavior.

After the math behind our LQR controller was working, we implemented a Carla agent and controller for the LQR and tossed our matrix values into a configuration file. Then we ran a lot of trials to refine the values in our A and B matrices. We ended up simplifying the system dynamics to include only two state variables, and we tuned the drag of the car and the effects of steering and throttle until the car reached equilibrium at the target speed and didn’t seem to over/under correct when steering. After much eyeballing, we threw our hands up and said, “Good enough!” and thus our A and B matrices were born.

Finally, all that was left to do was to tune our Q and R matrices until the car did what we wanted it to do. Our initial values in Q and R were grossly suboptimal, but through some experimentation, we found that decreasing the values in Q and R by a couple orders of magnitude resulted in finer adjustments of the steering and throttle, and keeping the cost of actuation relatively high resulted in a smooth ride as desired. Once the car made a smooth lap around the track, we smiled, pushed the code to the repo, and went to bed – our LQR controller’s big debut at 4am some random Thursday morning.

As we increased the speed of the car and tried different path planning methods, we tuned the costs of the LQR controller to get the behavior we want. Generally, we want the car to be decently reactive to changes in direction but not so much so that it would wobble all over the track. Fortunately, the LQR controller is pretty robust to different driving and planning conditions, but some fine-tuning definitely improves its performance around the tricky turns.

Racing

Smooth Waypoint Following Planner

The ROAR/planning_module/local_planner/smooth_waypoint_following_local_planner.py script contains the main logic for our smooth waypoint lookahead path planner. From our waypoints, we look ahead smooth_factor number of waypoints for path smoothing and speed_lookahead number of waypoints to define our target speed factor. The target waypoint provided to the controller is obtained by averaging the next smooth_factor waypoints. However, this can be quite computationally expensive, so we uniformly sample some of the waypoints to use in calculations. Then, for our proactive speed control, we compute the angle between our car’s current orientation and the position of the waypoint speed_lookahead steps ahead. When this angle increases, we know we are nearing a turn. Finally, our target speed for our controller is multiplied by a factor that is decreases linearly when as this angle increases, letting us slow down before the turn. The pseudocode is as follows:

def next_waypoint_smooth_and_speed(self, smooth_factor: int, speed_lookahead:int ) -> (Transform, float):

sample_points = range(0, smooth_factor, smooth_factor // sample_factor)

num_points = len(sample_points)

mean_location = reduce(lambda x, y: x + y, (self.way_points_queue[i].location for i in sample_points)) / num_points

mean_orientation = reduce(lambda x, y: x + y, (self.way_points_queue[i].rotation for i in sample_points)) / num_points

target_waypoint = Transform(location=mean_location, rotation=mean_rotation)

angle_difference = self._calculate_angle_error(self.way_points_queue[speed_lookahead])

speed_multiplier = max(min_speed_factor, (1.0 - alpha * angle_difference / np.pi))

return target_waypoint, speed_multiplier

The resulting waypoint and speed are then passed into the LQR controller for execution.

Results

Here we get to show our fun little videos of the car driving!

Sensing and Planning

Our car successfully detects and follows lanes at lower speeds (~50 km/hr). Below is a side-by-side comparison of two cars using the same (somewhat sensitive) LQR parameters. The one on the left directly follows the waypoints, while the one on the right includes lane-keeping. Notice the wild swerving when the car attempts to turn without any lane-keeping, and the lack thereof with the help of lane-keeping.

| No Lane Keeping | Lane Keeping |

|---|---|

|

|

We found that it was difficult to keep track of lanes denoted by dashed lines because the dashes seen by the camera were short and spaced rather far apart. This problem was exacerbated during turns, where the dashed lines in a lane were not even aligned with each other, making it difficult to stay in our lane while turning at higher speeds.

We were also able to detect obstacles in front of the car, as seen below. However, we were unable to implement a path planning algorithm for obstacle avoidance due to lack of time.

Controlling

The LQR controller performed notably better than the original PID controllers. Below we can see two instances of the car after we tuned the controller to the best of our ability. The one on the left is using the PID controllers, while the one on the right is using the LQR controller. Notice the behavior of the cars after the turn. The car driven by the LQR controller is notably more stable than the car driven by the PID controllers.

| PID Controller | LQR Controller |

|---|---|

|

|

Racing

The waypoint-lookahead planner allowed us to take turns more smoothly and cut in on the corners. Below are two instances of the car taking a turn. The one on the left is without the lookahead, while the one on the right uses waypoint lookahead.

| No waypoint lookahead smoothing | Waypoint lookahead smoothing |

|---|---|

|

|

We found that the performance of the lookahead planner was dependent on the speed of the car, the placement of the waypoints, and the shape of the turn. Thus, we do not know how well this planner will perform on an arbitrary track that we have not seen before. However, as seen above, if we have the opportunity to tune the speed of the car and the amount we look ahead, we can achieve very good turns.

We submitted our car to the ROAR competition and won the Grand Prize for this year’s 2020 ROAR S1 series, reaching a max speed of 188 km/hr along a figure-eight shaped track. A video of our car running a single lap can be found below. There are some tight corners and some instances where the car slows down unnecessarily, but overall the racing portion performed quite well.

Conclusion

We successfully achieved most of our goals for this project! Our lane detection and obstacle detection are functional, and we have implemented lane-keeping in our path planner. The LQR controller provides us with a smooth ride, and our waypoint-lookahead planner is very suitable for racing at high speeds. It is unfortunate that we were unable to implement obstacle avoidance, but given our current work, our car can pick up a rider and drive to a desired drop-off location while following lanes! Granted, it will be running through red lights and demolishing pedestrians, but that just means it works best at, like, 3 am!

One of our biggest difficulties was a lack of regular communication between our team members. Of course, there were design questions of what we wanted our project to do and how each person’s work fit into the whole, and there were technical challenges of how to actually implement what we want, but we feel that much of that could have been efficiently resolved through a brainstorming session, or having two or three people troubleshooting a problem, or catching up more frequently on what each person has done and how that affects the parts of the project that other people are working on. Instead, after distributing tasks and submitting our proposal, our group didn’t meet up again (or even talk to each other) for the next five weeks. During that time, a couple of us started working on our tasks, got stuck, and gave up without asking the rest of the group for help. Other group members were busy with other coursework and research and did not make any notable progress. By the time we finally met up again, there was only one and a half weeks left before the presentation and ROAR competition, and we only had two partially-functional components. After that, we met more frequently, posted our progress and updates in our group chat, and brainstormed and troubleshot with each other as necessary, which let us make tremendous progress during that last week and create a project worth presenting on and competing with. Given all that we accomplished, we wonder how much more we could have done if we had just talked to each other earlier.

With additional time, we would have focused on merging obstacle detection with some form of planning so that the car would be able to avoid obstacles such as other vehicles, the sides of the track, and barricades on the track. We also wanted to implement image segmentation using the RGB camera feed from the car, which would have allowed us to distinguish the track from all the other components of the feed, and optimize the car’s speed using this additional signal along with our existing lane detection and waypoint planning algorithms. Since we already used a masking approach to differentiate different regions of the image, this would have been quite possible.

Team

Alfredo De Goyeneche. Alfredo is a first-year Ph.D. student at the EECS department in the Control, Intelligent Systems, and Robotics area, currently working with Prof. Miki Lustig in automation and biofeedback for MRI (Magnetic Resonance Imaging). He graduated in 2018 from Pontificia Universidad Catolica de Chile with a BS in electrical engineering. After that, he spent 2 years in a startup - HeartVista - as a Machine Learning Engineer working on self-driving MRIs. For this project, he focused on the racing aspect. He designed and implemented the Smooth Waypoint Follower Planner, tuned the controllers and planning module for high speeds, coordinated the GitHub repository, and assisted in debugging aspects of other areas.

Alvin Tan. Alvin is a first-year electrical engineering PhD student at UC Berkeley. He graduated from Northwestern University in 2020 with a BS in computer engineering, economics, and math, and is currently researching wireless sensor networks with Prabal Dutta in Lab11. For this project, he designed and implemented the LQR controller, helped other group members troubleshoot their work, and coordinated the overall progress of the project and its deliverables.

Aman Sidhant. Aman is a senior undergraduate majoring in EECS. He has industry experience using computer vision at SAP Research. He has also worked more broadly with machine learning, deep learning, optimization, and control theory through coursework and projects, and thinks robots are cool. Aman primarily worked with Wesley on obstacle detection as part of the perception module of this project.

Sihao Chen. Sihao is a Master of Engineering student in UC Berkeley EECS. He graduated from Northeastern University (China) in 2020 with a B.Eng. in software engineering. He is in the assistive mouse capstone project group supervised by Prof. Brian A. Barsky. He led the perception part of this project, designed and implemented the lane detector and the lane following planner, and assisted with obstacle detection.

Wesley Wang. Wesley is a senior undergraduate majoring in CS. He has a broad knowledge of computer science knowledge such as machine learning, optimization, and algorithms from classes taken at Berkeley. He primarily worked on obstacle detection within the perception model with assistance from Aman.

Additional Materials

For the readers’ convenience, we will drop some links down here for the general ROAR competition, the ROAR starter code, and our own code that we wrote for this project. Also, while working on this project, we often found ourselves at a loss at what to do next or how to do it, which was a rather frustrating experience. Every time we hit one of these blocks, we would watch this inspirational video, and it would always help us keep on keeping on, so we wanted to share it with all of y’all! We hope that it can help y’all out when y’all are having a tough time as well.

Lastly, a BIG thanks to our professor Sastry and our wonderful TAs Ritika, Tiffany, and Amay for their support on this project! We could not have gotten this far without y’all. Thank you very much, and we wish y’all a Merry Christmas and a Happy New Year!